Meta-Prompting: Using LLMs to Improve How You Prompt LLMs

July 23, 2025

I have been developing with LLMs for some time now, and I thought it would be helpful to share some notes here and there. To be clear, I am by no means a leading expert. There are far more experienced folks out there who know how to bend models into doing some really cool things.

The focus of this post is meta-prompting: using language models to improve how you prompt language models. What does that mean? Put simply, if you don't know how to prompt an LLM effectively, ask it! This is especially helpful when you are building with LLMs and need to pass prompts to their APIs.

Here's a simple example to illustrate my point. The first prompt is simple: "Act like a historian and tell me an interesting event about the past". The model chose a particular event and started telling me about it. As a developer, I could pass a specific event into this prompt and it would do the same.

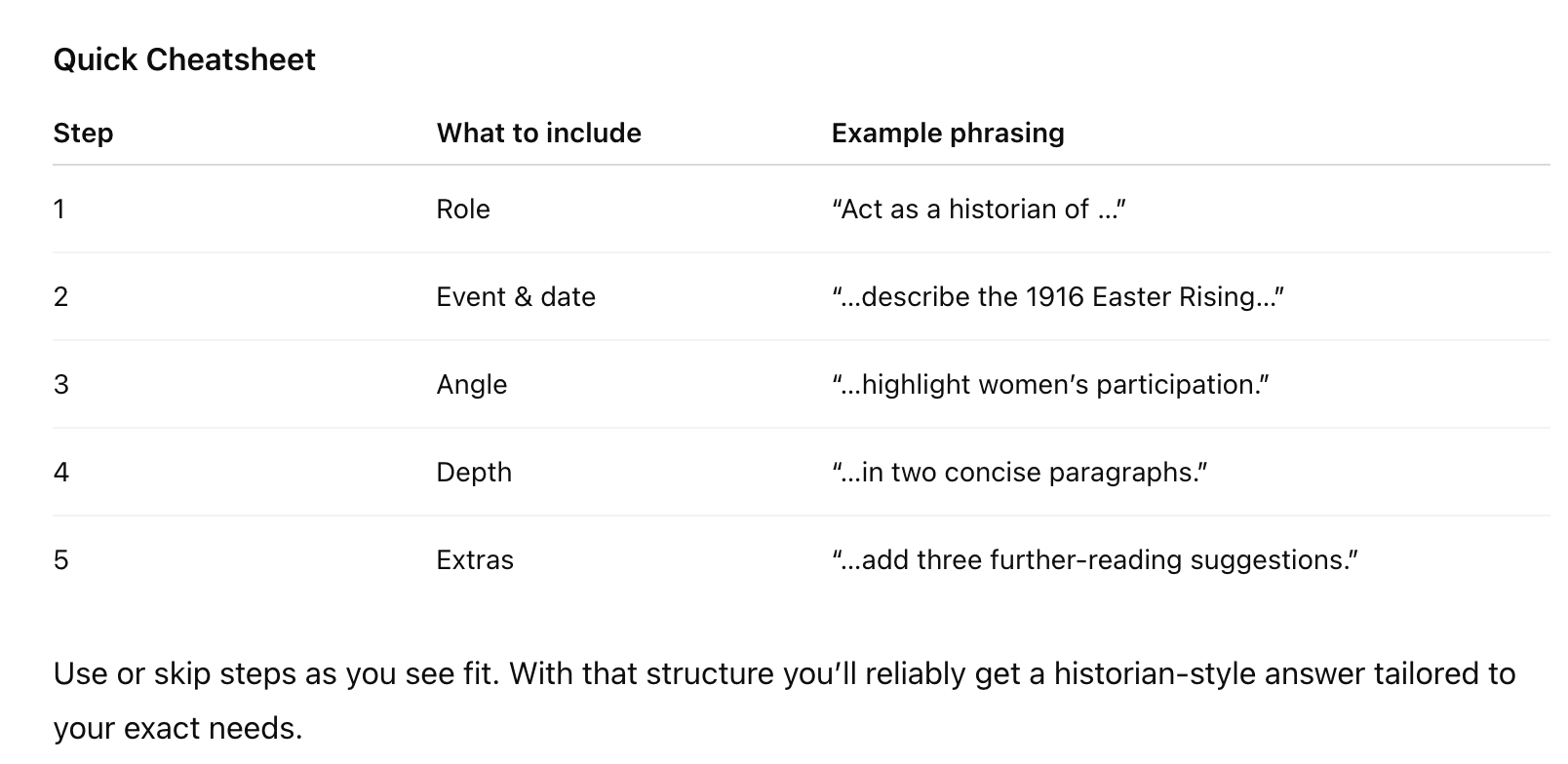

But this prompt isn't very effective, and it leaves a lot of assumptions implicit. Here's what happened when I asked "how would i prompt you to act like a historian and tell me about a historical event". I copied just the cheatsheet from OpenAI o3's response:

This gives you an idea of how to prompt the LLM with more control and specificity, which helps when you are building products on top of it.
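
To make the contrast concrete, here is a minimal Python sketch of the difference between a one-line prompt template and a more explicit one. The `HistorianRequest` fields and the extra instructions are hypothetical choices for illustration, not o3's actual cheatsheet; the point is that each assumption the one-liner leaves to the model becomes a parameter the developer controls.

```python
from dataclasses import dataclass


@dataclass
class HistorianRequest:
    """Hypothetical knobs a developer might expose instead of leaving them implicit."""
    event: str
    audience: str = "curious adults with no special background"
    length_words: int = 200
    tone: str = "engaging but accurate"


def naive_prompt(event: str) -> str:
    # The one-liner approach: role and task only; everything else is up to the model.
    return f"Act like a historian and tell me about {event}."


def specific_prompt(req: HistorianRequest) -> str:
    # Each assumption the one-liner leaves implicit becomes an explicit instruction.
    return "\n".join([
        "You are a historian.",
        f"Task: explain this historical event: {req.event}.",
        f"Audience: {req.audience}.",
        f"Length: about {req.length_words} words.",
        f"Tone: {req.tone}.",
        "Cover who was involved, when and where it happened, and why it mattered.",
        "If historians disagree on a point, say so instead of picking one version.",
    ])


if __name__ == "__main__":
    print(naive_prompt("the fall of Constantinople"))
    print()
    print(specific_prompt(HistorianRequest(event="the fall of Constantinople", length_words=150)))
```

Keeping the knobs in a data structure rather than in a hand-edited string also makes it easy to vary one assumption at a time and compare outputs.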

You can see meta-prompting in action in products that put a magic wand button in their prompt boxes. These take a basic prompt and turn it into a more robust one with some assumptions baked in. This is especially valuable for image and video generation products like Midjourney, where even a slight change in wording can completely change the output. Several of the large foundation model providers even offer prompt generator tools in their developer consoles.
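
A magic wand button like this can be approximated with a single extra LLM call. The sketch below assumes a `complete` function that sends text to a model and returns its reply (in practice, a thin wrapper around your provider's API); the meta-prompt wording is a hypothetical example, not what any particular product uses.

```python
from typing import Callable

# Hypothetical meta-prompt; real products do not necessarily word it this way.
META_PROMPT = """You are an expert prompt engineer.
Rewrite the user's prompt below so that a language model can follow it reliably.
Make implicit assumptions explicit (role, audience, length, format, constraints),
keep the user's original intent, and return only the rewritten prompt.

User prompt:
<<<
{prompt}
>>>"""


def enhance_prompt(basic_prompt: str, complete: Callable[[str], str]) -> str:
    """Magic-wand style enhancement: wrap the user's prompt in a meta-prompt and ask
    the model to rewrite it. `complete` is an assumed model-calling function."""
    meta = META_PROMPT.format(prompt=basic_prompt.strip())
    return complete(meta).strip()


if __name__ == "__main__":
    # Stub "model" that echoes its input, handy for inspecting exactly what gets sent.
    print(enhance_prompt("tell me about a historical event", complete=lambda text: text))
```

Swapping the echo stub for a real API call is the only change needed to try it against an actual model.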

With Kalen, I've been doing some basic meta-prompting with historical examples. When I find a gap, such as a response that doesn't properly reference some past goal, I ask Claude Code how to improve the prompt so that it doesn't make that mistake again. This is an incredibly valuable feedback loop. My next step is to figure out how to automate it. PromptHub describes some interesting ideas here, with frameworks like DSPy (which manages multiple LLM calls for iterative refinement) and TEXTGRAD (which uses natural language feedback to refine outputs).
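
One way to automate that loop, sketched under stated assumptions: run the current prompt on a set of inputs, check each output, and feed the failures back to the model as natural-language feedback so it can rewrite the prompt. This is a simplified loop in the spirit of TEXTGRAD-style feedback, not the DSPy or TEXTGRAD APIs. `complete` is an assumed model-calling function, and the `check` functions are hypothetical stand-ins for whatever test spots a gap, such as a reply that fails to mention a past goal.

```python
from typing import Callable

# An example is an input plus a check that returns True when the output is acceptable.
Example = tuple[str, Callable[[str], bool]]


def refine_prompt(
    prompt: str,
    examples: list[Example],
    complete: Callable[[str], str],
    max_rounds: int = 3,
) -> str:
    """Hypothetical refinement loop: run the prompt, collect failures, ask the model to fix it."""
    for _ in range(max_rounds):
        failures = []
        for user_input, check in examples:
            output = complete(f"{prompt}\n\n{user_input}")
            if not check(output):
                failures.append((user_input, output))
        if not failures:
            return prompt  # every example passes, so stop early
        report = "\n\n".join(f"Input: {inp}\nBad output: {out}" for inp, out in failures)
        # Natural-language feedback step: the model sees the failures and rewrites the prompt.
        prompt = complete(
            "The prompt below produced unacceptable outputs on these inputs.\n"
            f"Prompt:\n{prompt}\n\nFailures:\n{report}\n\n"
            "Rewrite the prompt so these mistakes do not recur. Return only the new prompt."
        ).strip()
    # Note: the last rewrite is returned without being re-checked.
    return prompt
```

Because the final rewrite is returned unchecked once `max_rounds` runs out, a real setup would probably re-run the examples on it, or keep the best-scoring prompt seen so far.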

I've enjoyed getting familiar with meta-prompting and adding it to my AI development toolbelt. It's effective in part because LLMs seem to be good at writing prompts. More broadly, LLMs do best in small, well-contained problem spaces. If you give one a generic task in a large problem space, it might not respond the way you want. If you (or an LLM) break the problem down into smaller subproblems, it can be more accurate. This is broadly how agents work, which I hope to dive into at some point!
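
The decompose-then-solve idea can be sketched as three kinds of LLM calls: one to plan subtasks, one per subtask, and one to combine the results. As before, `complete` is an assumed model-calling function, and the prompts and numbered-list parsing are hypothetical; real agent frameworks layer tool use, retries, and validation on top of something like this.

```python
import re
from typing import Callable


def parse_subtasks(plan: str) -> list[str]:
    """Turn a numbered list like '1. Foo' / '2) Bar' into ['Foo', 'Bar']."""
    subtasks = []
    for line in plan.splitlines():
        cleaned = re.sub(r"^\s*\d+[.)]\s*", "", line).strip()
        if cleaned:  # skip blank lines
            subtasks.append(cleaned)
    return subtasks


def solve_by_decomposition(task: str, complete: Callable[[str], str]) -> str:
    """Hypothetical plan -> solve -> combine pipeline built from small, focused LLM calls."""
    plan = complete(
        "Break the following task into a short numbered list of subtasks, "
        f"one per line, with no other text.\n\nTask: {task}"
    )
    subtasks = parse_subtasks(plan)
    # Each subtask gets its own narrow call, with the overall task as context.
    answers = [
        complete(f"Overall task: {task}\nSubtask: {sub}\nAnswer only this subtask.")
        for sub in subtasks
    ]
    combined = "\n\n".join(f"{sub}:\n{ans}" for sub, ans in zip(subtasks, answers))
    return complete(f"Combine these partial results into one answer to: {task}\n\n{combined}")
```

Each call sees a much smaller problem than the original task, which is the property the paragraph above credits for better accuracy.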

If you want to dive deeper, here are resources for learning more about meta-prompting:

- OpenAI Cookbook: Enhance your prompts with meta prompting - Official OpenAI guide with practical examples

- Prompt Engineering Guide: Meta Prompting - Comprehensive technical overview

- PromptHub: A Complete Guide to Meta Prompting - Step-by-step walkthrough with examples
