An Agent is Nothing Without its Tools

September 26, 2025

For the last couple of months, I have been struggling to understand what makes an agent. The industry is buzzing with the terms "agent" and "agentic", but no one seems able to define them. Fortunately, I recently came across a definition by Simon Willison that made sense. He defines an agent as follows: "An LLM agent runs tools in a loop to achieve a goal." What I like about this definition is that it's concise and concrete. It's not fluffy or salesy. It also breaks an agent down into its core components: the LLM, the tools, and the loop. In this post, I'm going to focus on the last two pieces to help explain agents.

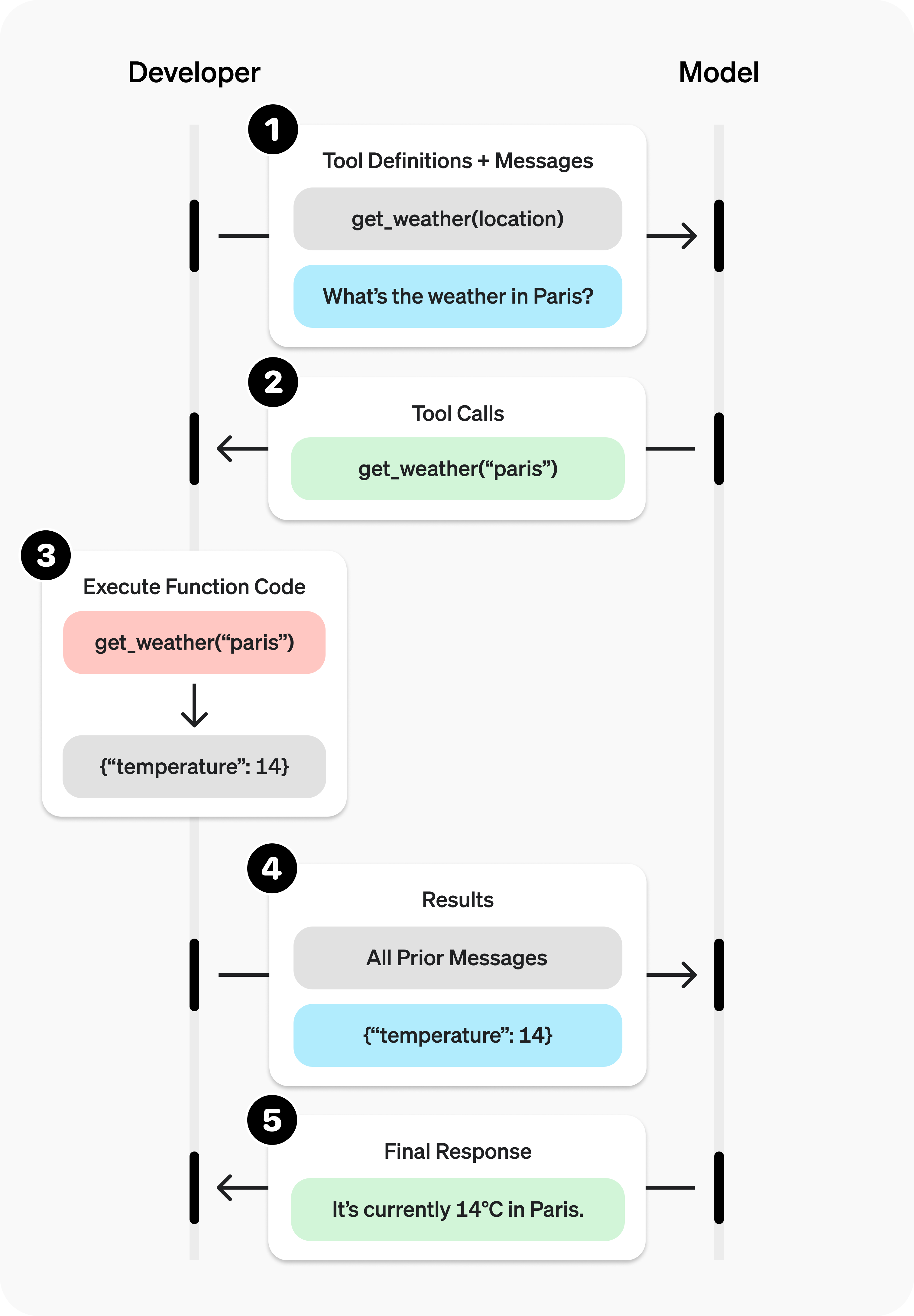

What are tools? In the OpenAI and Anthropic APIs, tools (also called functions) are a standard way to connect your own functions to the LLM. Under the hood, the tool names and descriptions are literally inserted into the prompt the model sees, typically as part of the system prompt, and the LLM decides whether or not to use a tool based on the user's prompt. For example, if you ask an LLM about the weather, it won't know the answer because it doesn't have current data. If you give it a tool whose description says it can get the weather, the LLM will recognize that tool as a way to get the data it needs. In other words, the LLM expects the tool to help it build context so it can better achieve its goal. However, the LLM doesn't (and can't) execute the tool; it simply returns the name of the tool to call, along with the arguments to call it with. The overall system (the agent) that wraps the LLM therefore has to execute the tool calls and then, very importantly, call the LLM again with the results. An agent is nothing without its tools.
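To make the split of responsibilities concrete, here is a minimal sketch of the application side of tool calling in plain Python, with no SDK. The tool definition uses a JSON Schema shape similar to what the OpenAI and Anthropic APIs accept, but the `tool_call` dict standing in for the model's reply is a simplified, hypothetical structure, and `get_weather` is a made-up stub rather than a real weather service.

```python
import json

# Hypothetical tool implementation: a stub standing in for a real weather API.
def get_weather(city: str) -> dict:
    fake_data = {"Paris": {"temp_c": 18, "conditions": "cloudy"}}
    return fake_data.get(city, {"error": f"no data for {city}"})

# What the LLM is given: a name, a natural-language description, and a
# JSON Schema for the arguments. The model only ever sees this text.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name, e.g. Paris"}},
        "required": ["city"],
    },
}

# Registry mapping tool names to the Python functions that implement them.
TOOLS = {"get_weather": get_weather}

def execute_tool_call(tool_call: dict) -> str:
    """Run the function the model asked for and return the result as text.

    `tool_call` uses a simplified, hypothetical shape:
    {"name": ..., "arguments": "<JSON string>"}.
    The model only picks the name and arguments; this code does the executing.
    """
    func = TOOLS[tool_call["name"]]
    args = json.loads(tool_call["arguments"])
    result = func(**args)
    # Serialized back to text so it can be sent to the LLM in the next request.
    return json.dumps(result)

# Pretend the model replied with this tool call instead of a final answer.
model_tool_call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
print(execute_tool_call(model_tool_call))  # → {"temp_c": 18, "conditions": "cloudy"}
```

The returned string isn't shown to the user directly; the agent sends it back to the LLM, which uses it to write the final answer.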

The second component of the agent is the loop. Once you connect an LLM to the outside world via tools, the LLM may not "achieve its goal" in a single step; instead, it may return a tool call. So the system needs to loop around the LLM until it returns a terminal response (a final answer rather than another tool call). This is interesting because it gives the LLM more control: the application developer provides context to the LLM through tool results and lets the LLM decide what to do next. This is what separates agents from workflows. Workflows are predefined paths in which the application developer uses LLMs as nodes, so the application developer stays in control. An agent has no predefined path; the LLM could achieve the goal in all sorts of ways. It can also get stuck calling tools forever, which is why agent frameworks (and some APIs) let you stop after a certain number of tool calls or turns.
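The loop itself is only a few lines. The sketch below assumes a hypothetical `call_llm(messages)` function that returns either a final answer or a tool call; here it is a scripted fake (it always asks about Paris once, then answers) so the example runs offline, and the message dicts are a simplified stand-in for the real OpenAI or Anthropic message formats. The `max_steps` parameter plays the role of the cap on tool calls described above.

```python
import json

def get_weather(city: str) -> dict:
    # Hypothetical stub; a real agent would call a weather service here.
    return {"city": city, "temp_c": 18, "conditions": "cloudy"}

TOOLS = {"get_weather": get_weather}

def call_llm(messages: list[dict]) -> dict:
    """Scripted fake LLM (hypothetical) so the example runs offline.

    First asks for the weather via a tool call, then answers from the tool result.
    """
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "get_weather",
                "arguments": json.dumps({"city": "Paris"})}
    data = json.loads(tool_results[-1]["content"])
    return {"type": "final",
            "content": f"It's {data['conditions']} and {data['temp_c']} C in {data['city']}."}

def run_agent(user_prompt: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_steps):
        reply = call_llm(messages)
        if reply["type"] == "final":
            # Terminal response: stop looping and return the answer.
            return reply["content"]
        # The LLM asked for a tool: run it, then feed the result back in.
        result = TOOLS[reply["name"]](**json.loads(reply["arguments"]))
        messages.append({"role": "assistant", "tool_call": reply})
        messages.append({"role": "tool", "content": json.dumps(result)})
    # Guard against looping on tools forever.
    raise RuntimeError(f"No final answer after {max_steps} steps")

print(run_agent("What's the weather in Paris?"))
```

Swapping the fake `call_llm` for a real API call is where the provider-specific message formats come in; the control flow of the loop stays the same.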

Here's a helpful diagram that visualizes both the tool calling and the loop components for a weather agent that can answer questions like "what's the weather in Paris":

Diagram courtesy of OpenAI

Taking a step back, this is kind of crazy if you think about it. With some text (prompts), a loop, and descriptions of your business logic (tools), you can supercharge an LLM into something like Claude Code. What's especially fun about this is that it's all language. The request to the LLM is language, and the LLM's response is language. There's nothing really intelligent about the mechanism itself. Tool definitions are text too, which means you can optimize them like you would your prompts, iterating on their names and descriptions so that each tool gets called at the right time.
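Because tool definitions are just text, iterating on them looks a lot like iterating on prompts. The sketch below renders tool definitions into a plain-text block and prints two candidate descriptions for the same tool. The rendering format is a hypothetical illustration, not how OpenAI or Anthropic actually serialize tools internally, and `lookup_ticket` is a made-up tool.

```python
from dataclasses import dataclass

@dataclass
class Tool:
    name: str
    description: str
    params: dict[str, str]  # parameter name -> short description

def render_tools(tools: list[Tool]) -> str:
    """Render tool definitions as plain text (a hypothetical format)."""
    lines = ["You can call the following tools:"]
    for t in tools:
        args = ", ".join(f"{p}: {d}" for p, d in t.params.items())
        lines.append(f"- {t.name}({args}): {t.description}")
    return "\n".join(lines)

# Two versions of the same hypothetical tool. Only the wording differs,
# and that wording is what the model uses to decide whether to call it.
vague = Tool("lookup_ticket", "Gets ticket.", {"ticket_id": "the ID"})
specific = Tool(
    "lookup_ticket",
    "Fetch an IT ticket's status, assignee, and history. Use this before "
    "answering any question about an existing ticket.",
    {"ticket_id": "ticket ID such as IT-1234"},
)

for version in (vague, specific):
    print(render_tools([version]))
    print()
```

Since each version is just a string, you can version it, diff it, and compare how often the model picks the tool with each wording.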

So why build an agent? Going back to the definition, an agent has a goal it's working toward, such as generating code or resolving IT tickets. Existing user experiences don't quite do that. An IDE helps the user write code, and it might autocomplete some of it, but it doesn't write the code and run the tests for you. Existing IT systems let you provision users or grant access to applications, but an agent can actually do that on behalf of an IT admin. This is where the goal part of the definition comes in: the agent is working to achieve a goal. Often that goal is not clearly defined at the start, and it can change along the way.

Lastly, I think the term agent is a bit misleading. It implies agency, which isn't really accurate. Assistant or intern might be better terms. You work with it, giving it all the information it needs to do its job, and ideally you give it a fairly simple task. You create guardrails to make sure it can't go off track. Agents are generally not that smart; you have to give them a lot of context and hold their hand. They also tend to make a lot of mistakes or go down the wrong path. This is why there's an ongoing conversation about how best to improve agents through things like evals, A/B testing, and prompt evolution (GEPA and DSPy), which I want to explore in a future post.
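As one illustration of a guardrail, here is a minimal sketch that checks a model-proposed tool call against an allowlist and the tool's required arguments before anything is executed. The `ALLOWED_TOOLS` table and `check_tool_call` function are hypothetical; real systems often layer on permissions, human approval for risky actions, and logging.

```python
import json

# Hypothetical allowlist: tool name -> set of required argument names.
ALLOWED_TOOLS = {
    "get_weather": {"city"},
    "lookup_ticket": {"ticket_id"},
}

def check_tool_call(name: str, arguments: str) -> tuple[bool, str]:
    """Return (ok, reason). Reject unknown tools and malformed or incomplete arguments."""
    if name not in ALLOWED_TOOLS:
        return False, f"tool {name!r} is not allowed"
    try:
        args = json.loads(arguments)
    except json.JSONDecodeError:
        return False, "arguments are not valid JSON"
    if not isinstance(args, dict):
        return False, "arguments must be a JSON object"
    missing = ALLOWED_TOOLS[name] - set(args)
    if missing:
        return False, f"missing arguments: {sorted(missing)}"
    return True, "ok"

print(check_tool_call("get_weather", '{"city": "Paris"}'))
print(check_tool_call("delete_user", '{"user": "bob"}'))
```

A rejected call doesn't have to end the run: the reason string can be sent back to the LLM as the tool result, giving it a chance to correct course.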
